For weeks, Twitter has been promising that it would be ending its “legacy” verification programme – the one that actually verifies users – and stripping the status from any user who didn’t pay.

Although the cut-off for the switchover was 1 April, the company didn’t seem to be joking. “We will begin winding down our legacy verified program and removing legacy verified checkmarks,” it posted on its official channels. “To keep your blue checkmark on Twitter, individuals can sign up for Twitter Blue.”

Twitter Blue is, of course, Twitter’s paid-for subscription service. It offers its own form of “verification”, which requires users to submit a working phone number.

Elon Musk, the company’s increasingly panicked owner and chief executive, has been quite clear on the motivation for the switchover: he views the blue check as a status symbol that has been unfairly handed out to members of a cultural elite, and – a worse sin – inefficiently given away for free when it has monetary value.

But then something funny happened: 1 April came and went without the blue checks disappearing.

That’s not quite true, actually. Exactly one “legacy” verified user lost its checkmark when the New York Times was stripped of its status. Musk directly intervened after a Twitter user pointed out the newspaper had reportedly committed to not paying.

No other account that had made similar commitments has yet lost their blue tick, and there have been many, including basketball player LeBron James, who tweeted: “Welp guess my blue ✔️ will be gone soon cause if you know me I ain’t paying the 5. 🤷🏾‍♂️”

In a follow-up tweet, rapidly deleted, Musk appeared to create a new policy on the fly, promising one user that Twitter would give verified accounts “a few weeks grace, unless they tell they won’t pay now, in which we will remove it [sic]”.

On Sunday, the day after the deadline, Twitter did push one change through to hit verified accounts, by removing the distinction between Twitter Blue and legacy users. Now, any user with a verified checkmark has the same description: “This account is verified because it’s subscribed to Twitter Blue or is a legacy verified account.”

It’s not hard to see the progression of events here. Musk’s belief that blue checkmarks are a desirable status symbol in and of themselves was the justification for selling them through Twitter Blue, but subscriber numbers for the service have been low: about 300,000 users have the Blue verified checkmark.

And so the company tried to juice signups by forcing legacy verified users, of which there are about 400,000, to pay. But legacy users … didn’t. Because the value of a legacy checkmark was many things – a mark of importance, proof that you were who you said you were, a way of ignoring journalists on Twitter – but they were all a function of its limited availability.

Merging the public-facing side of the two different systems, so that users can no longer tell who has a paid-for verification and whose came under the old regime, does little to make legacy users pay. But it does finally undercut any remaining value the previous system had retained.

For a few hours on Sunday, a verified New York Times account did end up reappearing on the social network – after former Simpsons writer Bill Oakley changed his display name and profile picture to that of the paper. As for Twitter under Musk? It seems unlikely that even he knows what the next plans are. Likes are now florps?

Oh, no, wait: as I write this, he’s just changed the Twitter logo to a badly rendered version of the Dogecoin logo in an apparently days-late April Fools’ gag. What a cool guy.

ChatCreepyT

Italy has banned ChatGPT over concerns that OpenAI gathered and used personal data to train its underlying AI without proper consent. From our story:

The Italian watchdog cited concerns about how the chatbot processed information in its statement.

It referred to “the lack of a notice to users and to all those involved whose data is gathered by OpenAI” and said there appears to be “no legal basis underpinning the massive collection and processing of personal data in order to ‘train’ the algorithms on which the platform relies”.

On Monday, the UK’s ICO expressed its own concerns, though stopped well short of a follow-up ban:

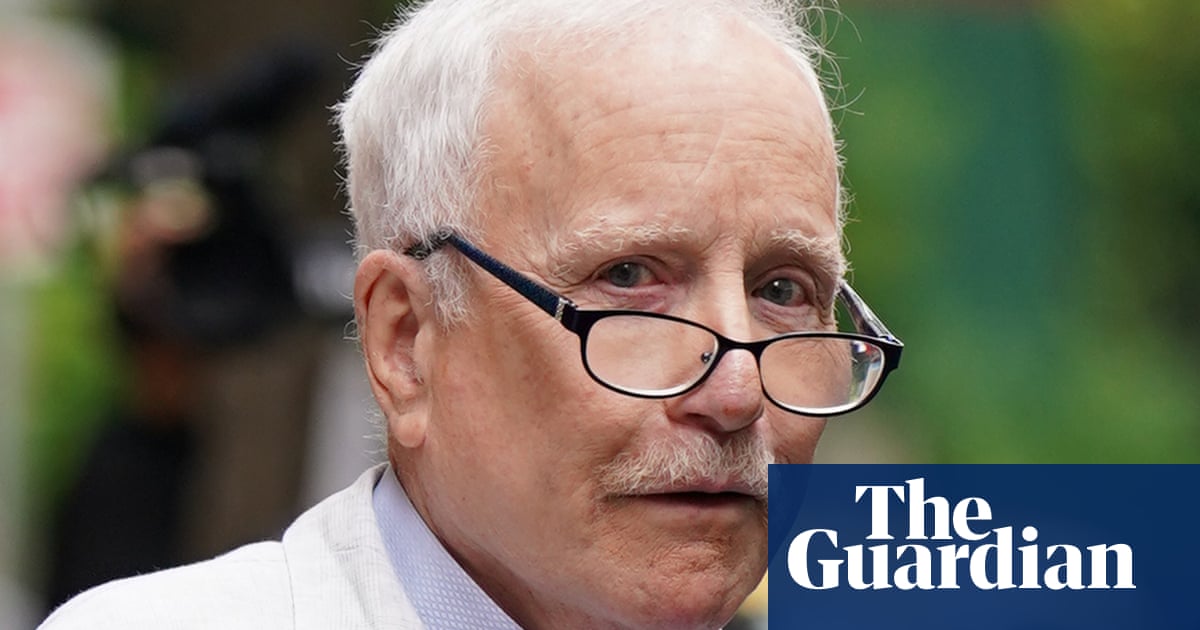

“There really can be no excuse for getting the privacy implications of generative AI wrong. We’ll be working hard to make sure that organisations get it right,” said Stephen Almond, the ICO’s director of technology and innovation.

“It doesn’t take too much imagination to see the potential for a company to quickly damage a hard-earned relationship with customers through poor use of generative AI.”

OpenAI is incredibly secretive about the data it uses to train its latest versions of GPT. For a previous release, GPT-3, the company revealed in general terms that it had used two repositories of ebooks, the content of the English-language Wikipedia, and about 300 billion words scraped from the open web, but it gave little further detail.

This time round, it declined to even give that level of detail, arguing that because of “the competitive landscape and the safety implications of large-scale models like GPT-4” it needed to give no further information than the fact that the training data included “both publicly available data (such as internet data) and data licensed from third-party providers”.

For a while I’ve been convinced that this approach would end in a bruising battle over copyright. OpenAI conspicuously does not make assurances like those that Adobe does with its Firefly image generator – that all training data is appropriately licensed. Instead, the company is betting that its use of copyrighted material is covered by fair use, while also keeping the specifics just hazy enough to prevent anyone from having standing to sue.

But the Italian ban suggests an alternative risk for anyone training large language models: data protection. If you’re scraping data from the internet then, unless you’re careful, you’re almost certainly scraping personal data from the internet, and maybe even scraping “special categories” of personal data – information about an individual’s sex life or race, for instance – which carries massive protections. It’s not creepy at all to think about an AI inferring details about your sex life after scraping your Instagram posts.

And unlike copyrighted material, there’s no concept of fair use for such data. So can OpenAI commit to not scraping personal data or using it to train its AI? The best the company can say is “we actively work to reduce personal data in training our AI systems like ChatGPT”. Convincing.


Conned

E3, North America’s largest video game conference, is no more. From The Verge’s story:

Gaming’s big summer show was set to return in person in Los Angeles for the first time since 2019, but it’s been called off after huge gaming companies like Nintendo, Microsoft, and Ubisoft all said they wouldn’t be participating in the event.

The convention hasn’t been fully shuttered, with organisers holding out hope for a return in 2024, but after four straight years off, it’s increasingly clear that any revival will have to be built from scratch.

This is first and foremost a video games story, and after two decades of the annual gaming calendar being built around splashy summer showcases, the absence of E3 has already started to have ramifications in launch windows, marketing campaigns and air miles flown by journalists and developers.

But I think that gaming is frequently a harbinger of the future of media, and that’s true here. The proximal cause of E3’s death was Covid, but the convention has been increasingly damaged by a fundamental realignment of the relationships between press, publishers, and public. Ever since Apple popularised the idea of a press conference made for public consumption, the gaming industry has been eagerly adopting it, with Nintendo leading the way.

By 2019, the last year of E3 and my first visit, the bulk of the show’s major announcements were live-streamed to the public in standalone events with little intrinsic link to the convention itself. You still needed to be there to get hands-on with the titles, but Covid shifted the norm there, as well, forcing publishers to work out how to get preview builds to journalists remotely – or increasingly pushing them to adopt an “early access” model or a revival of the public game demo, both of which cut out the media entirely.

“Going direct” is nothing new, but the death of E3 is perhaps the strongest sign to date that it’s here to stay.

For more on E3, subscribe to Pushing Buttons, where Keza MacDonald will offer her thoughts tomorrow

The wider TechScape

GQ has an interview with Apple’s Tim Cook, the anti-Elon. You won’t learn that much new from a man who keeps his cards this close to his chest, but it’s an interesting character study anyway.

Good news: AI can create diverse models for the fashion industry! Bad news: that doesn’t really help anyone but the fashion industry.

How AI trained on overwhelmingly American images is reproducing a visual monoculture of American ideals.

“Natural Selection Favors AIs over Humans”. Dan Hendrycks is yet another serious AI researcher raising serious concerns about the direction of this field.

Binance is subject to a US governmental complaint and it’s messy as hell.
